History:Track Grouping Proposal

| Status: This page describes a failed proposal. It is not official, and should only be used, if at all, as the basis for a new proposal. |

Status: This is a proposal for a database schema change that will allow grouping tracks that have something in common together on several levels. It was started by Shepard, and was made a collective working draft by DonRedman. If it gets sanctioned it will probably be part of the NextGenerationSchema.

- Update: Since this page is getting too long to edit, we are moving the content over to a new page: ObjectModel

Note: this is NOT about groups of tracks on albums (like several parts of a song or movements). You can find some thoughts about this on TrackGroupings.

Why grouping?

Ever tried to create an instance of CoverRelationshipType? It's a mess! You have to look up the earliest release, and if the cover is released on several albums, link all of them together (and normally also link all of the original releases together). But the real problem unfolds when you try to trace such a relationship chain back. Was this song covered? -> Look up the earliest release and see if it has a cover relationship. If you want to see the real mess, I suggest you read DontMakeRelationshipClusters (and perhaps also LinkingDifferentArtistNames).

The problems listed there can be solved simply and effectively by adding group entities to the database, which represent a more abstract layer of what the member objects have in common. This applies not only to tracks but also to albums and artists (see AlbumRework and AdvancedEntity). Such a group, acting as a representative, can be used as the target of AdvancedRelationships such as CoverRelationshipType.

Developing a group model for tracks

If you want to group tracks, you have to realize that you cannot simply say "tracks A, B and C have the same name, so group them". You actually need several layers which build a hierarchy, becoming more abstract from the bottom to the top.

The following layers are very fine grained. They are actually too fine grained on purpose. We will think about which layers can be merged later. Let's start to roll this up from the top, where it starts in the artist's mind:

Song

The most abstract thing is a song. The artist(s) develop the idea for a song and at this point it is an abstract thing you cannot touch.

So a song is an abstract idea. Does it have a name? Not necessarily so. We could even argue that it is not factual data.

Composition

The song becomes factual when the artists start composing and writing lyrics. This is more "touchable" as you can see the lyrics and notes. So, let's define a composition as something that can be put down on a score.

A composition is also a legal object under national copyright law: it is considered a "work" of art and is copyrightable.

Song -- Composition

- A song can have different compositions (1--many). Sometimes songs are recomposed in a different way (for acoustic performance for example) or lyrics are changed, but the basic idea stays the same. If an idea is not made explicit by composing, it is of no interest to MusicBrainz. Therefore a song must have at least one composition.

- For the sake of simplicity we can say that a composition can only be based on one song. Is a composition always based upon a song? That is, does it have exactly 1 or 0--1 songs? If the song is an abstract idea, we can say that a composition is based on exactly one song (1).

- Or should we allow a composition to be based on multiple songs? At this point the abstract idea of a song is becoming wobbly. We'd need some examples here.
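The Song -- Composition cardinalities above can be sketched as a small Python data model. This is a minimal sketch under stated assumptions: the class names, fields and the `check_song` helper are hypothetical (not an existing MusicBrainz schema), and it takes the "exactly one song (1)" option for compositions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Song:
    # Hypothetical entity for the abstract idea; a name is optional.
    name: Optional[str] = None
    compositions: List["Composition"] = field(default_factory=list)


@dataclass
class Composition:
    # Each composition is based on exactly one song (1).
    song: Song = field(repr=False, compare=False)
    title: str = ""

    def __post_init__(self) -> None:
        # Keep the 1--many back-reference on the song in sync.
        self.song.compositions.append(self)


def check_song(song: Song) -> None:
    # A song that was never made explicit by composing is of no interest,
    # so it must have at least one composition.
    if not song.compositions:
        raise ValueError("a song must have at least one composition")
```

Usage: `Composition(song, "Acoustic version")` registers a recomposition under the same song; `check_song` is the hook where the "at least one" rule would be enforced.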

Arrangement?

If we look into the very fine-grained details, then we could add a level of arrangement here. The distinction between two different compositions of one song and two different arrangements of the same composition is minimal. The main difference is in the person who does it.

- The composer composes a work and is its "owner".

- The arranger takes an existing composition and adapts it to a specific kind of performance, but he does not perform it.

Note that the arranger can be the same person as the composer; or they can be the same person as the performer. The latter would be a case of an implicit arrangement. The difference is that arranging is a purely virtual process, while performing is something concrete. That means that an arrangement can be less "touchable" than a composition, or as "touchable" if it is set in score.

Composition -- Arrangement

- If we use the concept of implicit arrangement, we can define that a composition has at least one arrangement. It can have more arrangements (i.e. be rearranged), too. The relation is 1--many.

- An arrangement must be based on a composition, and on exactly one composition (1). That is, an arrangement includes the concept of a composition.

- We should not allow an arrangement to be based on multiple compositions. Arranging is a very simple and straightforward process. It might however become necessary to allow a composition to use other compositions. Again, we'd need some examples to decide this.
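The implicit-arrangement idea can be sketched as a placeholder that is created on demand, so every composition ends up with at least one arrangement. A minimal sketch, assuming hypothetical class names and a `default_arrangement` helper that are not part of any real schema; `arranger=None` is this sketch's convention for "implicit".

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Composition:
    title: str
    arrangements: List["Arrangement"] = field(default_factory=list)

    def default_arrangement(self) -> "Arrangement":
        # Implicit arrangement: if no explicit arrangement exists, create a
        # placeholder so that every composition has at least one (1--many).
        if not self.arrangements:
            Arrangement(self)
        return self.arrangements[0]


@dataclass
class Arrangement:
    # Based on exactly one composition (1).
    composition: Composition = field(repr=False, compare=False)
    arranger: Optional[str] = None  # None marks an implicit arrangement

    def __post_init__(self) -> None:
        # Register the arrangement on its composition.
        self.composition.arrangements.append(self)
```

An explicit rearrangement is simply another `Arrangement(comp, arranger="...")` on the same composition.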

Recording/Performance

After that, the artists start producing audio material, either by recording a song in a studio or by performing it live (where it is then also recorded, as we don't care about unrecorded performances in MusicBrainz). The song may be re-recorded in the studio or, as said, played live; then you have different recordings/performances using the same arrangement, which forms the layer above. A recording is "listenable" but not "touchable", although it has to reside on some medium. It consists of analog or digitally stored data. Also, the same recording can be recognized by the human ear (e.g. "That remix uses this recording of 'Strange Fruit' by Billie Holiday").

If a song is covered, the performer changes and a new recording is produced, but the composition stays the same. In some genres (e.g. jazz) the arrangement often happens during the performance; we have called this an implicit arrangement. Still, they remain different processes: the arrangement consists of distributing notes to instruments, and the performance consists of playing these notes.

At the end of a performance we have, for the first time in this hierarchy, something that you can listen to. For the sake of clarity we will define the process of recording as the first point at which the audio information is stored. Everything that happens with the stored audio is part of the next steps.

Arrangement -- Recording/Performance

- An arrangement can have multiple recordings/performances. It must have at least one to be of interest to MusicBrainz (1-many).

- Now this gets tricky. How many arrangements can a recording/performance have? The question here is when does a performance begin and when does it end.

- If we define a recording to be a "take", then it can contain multiple arrangements. E.g. if a musician plays a medley in one take, their performance will be based on multiple arrangements.

- If, however, we define a recording to be the performance of an arrangement, then we cut the take at the moment the musicians move on to the next arrangement. This is problematic, though, since the musicians could fade from one arrangement to the other or even play two at the same time.

Therefore we have to allow multiple arrangements to one recording/performance. Since there are recordings of birdsong or crowd noise, it is possible for a recording to have no arrangement (0--many).

- (Note that the database could contain some special arrangement entities for these special cases, so on the database level this could be changed to 1--many.)
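The difference between the model-level 0--many and a database-level 1--many backed by a special arrangement entity can be sketched like this. A minimal sketch: `NO_ARRANGEMENT` and `stored_arrangements` are hypothetical names for the "special arrangement entity" suggested in the note.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Arrangement:
    title: str


# Hypothetical special arrangement entity for recordings that have none
# (birdsong, crowd noise), so the database level can require 1--many.
NO_ARRANGEMENT = Arrangement("[no arrangement]")


@dataclass
class Recording:
    label: str
    # Model level: 0--many arrangements (a medley take uses several).
    arrangements: List[Arrangement] = field(default_factory=list)

    def stored_arrangements(self) -> List[Arrangement]:
        # Database level: substitute the placeholder so that every
        # recording references at least one arrangement.
        return self.arrangements or [NO_ARRANGEMENT]
```

A medley take keeps both of its arrangements, while a field recording of birdsong is stored against the placeholder.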

Mix and/or Master

Are you still with us? By now the performance has reached a form of stored audio. Now we have two steps that happen within this stored audio: mixing and mastering[1].

First, the audio is mixed, edited, or engineered. This "mixdown" consists of taking the individual 'tracks' of the take (German: Tonspuren, i.e. the channels of a multitrack recording), applying effects and mixing them together. This is a different process from mastering (next layer), in which a single track is adapted to the other tracks of a set in loudness, sound, and length, and might be faded in and out.

However both mixing and mastering are processes that take stored audio material and produce other stored audio material. Defining the exact boundary between these two layers is tricky.

- A mix is independent of the set on which the audio will be released (but how do you determine that? Is this really true for the creative process involved?). It is also independent of the actual length that is stored. That is, an album version and a radio edit of the same recording (faded out, with no further changes) are the same mix.

- A master groups entities that contain the exact same audio (which includes their duration and fading). Mastering is the last time the audio is edited before it is fixed on a medium, and it is the last time in this hierarchy that we deal with media-independently stored audio.

These two entities will probably have to be merged. However, we differentiate them here, because they fulfill different roles:

- Mix and master combined is broad enough to be the target of all AdvancedRelationshipTypes that deal with any kind of mixing (Remix Relationship Class, Compilation Relationship Class, Engineer Relationship Type).

- Mix alone is useful for grouping different tracks of the same mix. If you want to group remasters of the same mix or DJ-mixes, then you have to keep mastering below the grouping entity.

OK, this really is splitting hairs. It serves one purpose, though: to make clear that there are two possible boundaries between a mix and a track. If you choose "exact same audio" as the boundary (which is the cleanest solution), then the resulting mix/master entity is not useful for grouping different mixes and masters of a song.

If we look at remixes, we will notice an additional difficulty: a remix produces a mix/master. It can, however, be based on a recording/performance or on another mix/master; the latter effectively makes it a re-mix. So we could keep remixes out of this hierarchy and describe them by Mix-Mix AdvancedRelationships.

Recording/Performance -- Mix and/or Master

- A recording/performance can be engineered into multiple mixes. This happens regularly on singles, which release different mixes of the same performed song. Again we can require a recording to be engineered into at least one mix to be of interest to MusicBrainz. The relation is 1--many here.

- Can a mix combine multiple recordings/performances? I fear the answer is yes, even if we exclude remixes. But we need some examples here.

- In http://musicbrainz.org/track/f2338d89-0851-4ad1-8a73-2c586c8860e3.html, Malia's interpretation is mixed with extracts from Billie Holiday's. Is this what you were looking for? --davitof

- A mix can be mastered multiple times and, again, at least once (1--many).

- A master is based on exactly one mix (1), otherwise we are dealing with a remix.
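The Recording -- Mix -- Master cardinalities, with remixes kept out of the hierarchy as a Mix-Mix link, can be sketched as follows. A minimal sketch under assumptions: the class names, the `remix_of` field and the recording ids are hypothetical; allowing several recordings per mix follows the Malia/Billie Holiday example above.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Mix:
    name: str
    # One or more recording/performance ids; more than one covers a mix
    # built from two different performances.
    recordings: Tuple[str, ...]
    # Remixes stay out of the hierarchy: a Mix-Mix link instead.
    remix_of: Optional["Mix"] = None

    def __post_init__(self) -> None:
        if not self.recordings:
            raise ValueError("a mix needs at least one recording/performance")


@dataclass(frozen=True)
class Master:
    mix: Mix          # exactly one mix (1); anything else is a remix
    duration_ms: int  # mastering fixes the exact audio, including length
```

An album version and a radio edit then become two `Master` objects sharing one `Mix`, which is exactly the grouping the mix layer is meant to provide.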

Track

OK, so by now we have mastered audio which has not yet been stored on a medium. The moment it is stored on a medium, we call it a track. That means that a track has an (optional?) position on a "disc" and a specific TrackTitle. We know by now that the exact same master can be released on two albums with different track titles. Tracks are what circulate in P2P networks; that is, a file is a track.

Tracks are not really useful for grouping. Actually, it is questionable whether they have to be represented as an entity in the database at all. They could very well be represented by an ordered relationship between an album and a master, if it were not for the different track titles.

Master -- Track

- A master can be pressed on multiple tracks, but again on at least one (1--many).

- A track is a pressing of exactly one master (1).
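The Master -- Track relation is close to the "ordered relationship between an album and a master" described above; the track title is the extra payload. A minimal sketch: the `Track` fields, the tracklist shape and the `releases_of` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Track:
    master_id: str           # a pressing of exactly one master (1)
    title: str               # the TrackTitle may differ per release
    position: Optional[int]  # position on the "disc" (possibly optional)


# Hypothetical tracklists: album -> tracks in order.
Tracklists = Dict[str, List[Track]]


def releases_of(master_id: str, tracklists: Tracklists) -> List[Tuple[str, str]]:
    # Walk the master's 1--many pressings: (album, title) for each track.
    return [
        (album, track.title)
        for album, tracks in tracklists.items()
        for track in tracks
        if track.master_id == master_id
    ]
```

If titles never differed, `Track` would reduce to `(album, position) -> master_id`, which is the ordered relationship mentioned in the text.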

OK, that was simple. What if we relate tracks to mixes (folding the master into the track)?

- This is hypothetical only and should probably be deleted --DonRedman

- A mix can be mastered into and pressed on multiple master/tracks. That is 1--many as above.

- A master/track must be a pressing and master of exactly one mix. That is 1 as above, too. But the difference is that there are now many more master/tracks and they do not consist of the same audio anymore.

Uh? --DonRedman


OK, I have come this far in working over Shepard's distinction. I have made it more detailed and I have added real data-object relations. If someone installs the dot.py parser (available on ParserMarket), I will add diagrams. Then we should be able to discuss, by editing the diagrams, which layers can be merged, what data we lose if we do this and whether it is worth the simplicity. In the meantime, here is a PNG (I have temporarily dumped the dot source in /DotSource): --DonRedman

Applying the model to MB

Please note: The following is based on the first draft of the grouping description above. It has to be reworked with DonRedman's enhancements, so we need to decide which of the layers above we need.

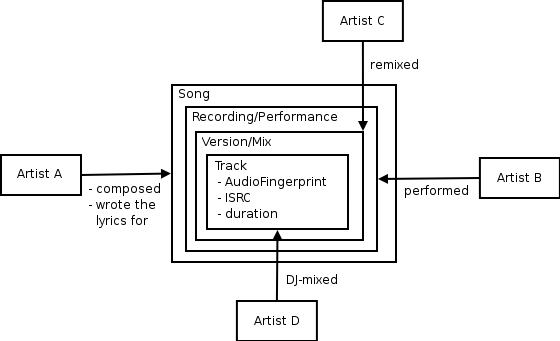

This all sounds OK, but how do we explain it to users? And what's the use? OK, let's try to simplify it and see where we can add which fields and use which AdvancedRelationshipTypes.

First of all, those are too many groups, and they are not easy to use for our editors. What problems would we run into if we merged the song layer with the composition layer? For that, we have to examine how often a song is recomposed and how important it is for users to distinguish between the compositions. I don't know how often this happens with classical music, but for the rest I guess it's rather rare. As I said, acoustic versions, for example, could use another composition. So when we come to those cases, we could either ignore the small composition/lyrics changes and store them all under one song group, or, if it's really important, create two separate song groups, as with covers: you would create two separate song groups for the original and the cover and link them with AdvancedRelationships.

Can we then merge the song layer with the recording/performance layer? No: one composer, different performers. That's important. Can recording/performance be merged with version/mix? No, the mix versions have completely different audio material and track titles, and often different mix artists. But can we merge version/mix with track? Tricky; it depends on how precise you want to be. The different tracks can still have different durations, so I'd say better not.

OK, at least we have reduced it to 4 layers. Now what data can we store where? AdvancedRelationships no longer have to be linked to tracks only, but can also be linked to groups. Most types can even be restricted to certain groups. I'm doing this only for some classes/types now and will extend it later. A song group can be the target of all AdvancedRelationships of the Composition Relationship Class and the origin and target of CoverRelationshipType. Recording groups are targets of the PerformanceRelationshipClass. Version/mix groups can be the target of RemixerRelationshipType and the origin and target of RemixRelationshipType.
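The layer restrictions listed above amount to a lookup table that an edit form could check before accepting a relationship. A minimal sketch, assuming hypothetical short keys for the classes/types named in the paragraph and layer names "song", "recording", "version"; `None` as origin stands for a non-group entity such as an artist. Only the types named above are covered.

```python
from typing import Dict, FrozenSet, Optional, Tuple

# (allowed origin layers, or None when the origin is not a group such as an
#  artist; allowed target layers). Hypothetical keys, not real type names.
Rule = Tuple[Optional[FrozenSet[str]], FrozenSet[str]]

RULES: Dict[str, Rule] = {
    "composition": (None, frozenset({"song"})),  # Composition Relationship Class
    "cover": (frozenset({"song"}), frozenset({"song"})),
    "performance": (None, frozenset({"recording"})),
    "remixer": (None, frozenset({"version"})),
    "remix": (frozenset({"version"}), frozenset({"version"})),
}


def is_allowed(rel_type: str, origin_layer: Optional[str], target_layer: str) -> bool:
    origins, targets = RULES[rel_type]
    if target_layer not in targets:
        return False
    if origins is None:
        # Origin must be a non-group entity, e.g. the composing artist.
        return origin_layer is None
    return origin_layer in origins
```

Relationships that still attach to tracks would need their own entries; the table only encodes the restrictions spelled out here.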

What other data is there, apart from AdvancedRelationships? Well, the duration, for example. That clearly belongs to the track level. Then we have audio fingerprints; whether they should be attached to track objects or to version groups depends on their accuracy. This is a decision for the developers. But there is one thing that is not under our control: the ISRC, the International Standard Recording Code. This is a (young) code system that assigns unique code numbers to recordings of songs, which then come with the CD or other medium. We could store those as well. The only question is how precisely they are defined, i.e. on which level they belong. http://www.ifpi.org/isrc/isrc_faq.html#Heading44 is quite clear about that and makes me think they belong to the track layer, but that has to be checked carefully.
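Whatever level ISRCs end up on, storing them means accepting only well-formed codes. A minimal sketch, assuming the ISO 3901 layout (2-letter country code, 3-character alphanumeric registrant code, 2-digit year, 5-digit designation code) and that codes printed with hyphens should be stored in the compact 12-character form; `normalize_isrc` is a hypothetical helper and does not decide which layer the code attaches to.

```python
import re

# ISO 3901 layout: country (2 letters), registrant (3 alphanumerics),
# year of reference (2 digits), designation code (5 digits).
ISRC_PATTERN = re.compile(r"[A-Z]{2}[A-Z0-9]{3}[0-9]{2}[0-9]{5}")


def normalize_isrc(raw: str) -> str:
    # Accept the hyphenated display form (CC-XXX-YY-NNNNN) and return
    # the compact 12-character form for storage.
    compact = raw.replace("-", "").strip().upper()
    if not ISRC_PATTERN.fullmatch(compact):
        raise ValueError(f"not a valid ISRC: {raw!r}")
    return compact
```

For example, the made-up code `gb-abc-05-00001` normalizes to `GBABC0500001`.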

Keep the following in mind: these groups exist only for abstraction and for attaching metadata non-redundantly. They are helpers; albums and artists still link to the tracks, not to any of the layers above. When presenting tracks, though, they inherit all AdvancedRelationships from their super groups (which makes it a bit slower) and should also present links to their super groups.
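Presenting a track with everything inherited from its super groups is a walk up the hierarchy. A minimal sketch, assuming each track or group has a single `parent` one layer up (track -> version -> recording -> song); the `Entity` class and relationship strings are hypothetical placeholders.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Entity:
    # A track or a group; `parent` points one layer up.
    name: str
    parent: Optional["Entity"] = None
    relationships: List[str] = field(default_factory=list)


def inherited_relationships(track: Entity) -> List[str]:
    # Collect the entity's own relationships first, then those of each
    # super group. Every step up is another lookup, which is where the
    # extra cost of this presentation comes from.
    collected: List[str] = []
    node: Optional[Entity] = track
    while node is not None:
        collected.extend(node.relationships)
        node = node.parent
    return collected
```

Because the data lives only once per group, two tracks in the same recording group show the same performance relationships without duplicating them.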

All in all it could look like this:

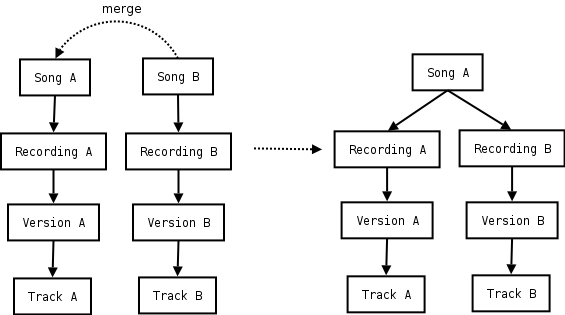

Converting to this model

This divides into two parts. The first is done automatically when converting to the new schema: for every track we create a new version group, recording group and song group. The AdvancedRelationships can then easily be moved to the correct group. The next step is the hard work for us: merging the groups. This should be an AutoEdit for AutoEditors and go to a vote for normal users.
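The automatic first step can be sketched as creating one fresh chain of groups per track and then looking up the group at a given layer when moving relationships. A minimal sketch: the `Schema` layout, the generated ids and the `convert`/`group_at` helpers are hypothetical, not the real conversion script.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, List

LAYERS = ("version", "recording", "song")  # bottom to top


@dataclass
class Schema:
    track_version: Dict[str, str] = field(default_factory=dict)  # track -> version group
    parent: Dict[str, str] = field(default_factory=dict)         # group -> group one layer up


def convert(track_ids: List[str]) -> Schema:
    ids = count(1)
    schema = Schema()
    for track in track_ids:
        # One fresh group per layer for every track; nothing is shared yet,
        # so merging is left to editors afterwards.
        version, recording, song = (f"{layer}-{next(ids)}" for layer in LAYERS)
        schema.track_version[track] = version
        schema.parent[version] = recording
        schema.parent[recording] = song
    return schema


def group_at(schema: Schema, track: str, layer: str) -> str:
    # Walk up from the track's version group to the requested layer, e.g. to
    # decide where a converted AdvancedRelationship should be moved.
    group = schema.track_version[track]
    for _ in range(LAYERS.index(layer)):
        group = schema.parent[group]
    return group
```

After conversion every group has exactly one member, so the result is correct but maximally fragmented until editors merge it.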

An example:

Problems

Fast and usable or non-redundant?

Requesting all the information from a track's super groups will make the album view slower, as will all the db schema changes in AlbumRework, I guess. But one thing is in question: should titles be stored redundantly? That is, if a TrackEntity stores its title, should the groups above store it too? Or the other way round: should only the song store a title, and all subgroups that don't differ from it have an empty title? That would reduce redundancy (less risk of inconsistency) but would also be a bit more complicated to use and require more database lookups to actually retrieve titles.
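The non-redundant option (only titles that differ are stored) can be sketched as a lookup that walks up until it finds a title, counting the entities read to make the extra cost visible. A minimal sketch; `Level`, `resolve_title` and the example titles are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Level:
    # title=None means "same as the layer above" (non-redundant storage).
    title: Optional[str]
    parent: Optional["Level"] = None


def resolve_title(level: Level) -> Tuple[str, int]:
    # Return the effective title and how many entities had to be read.
    lookups = 0
    node: Optional[Level] = level
    while node is not None:
        lookups += 1
        if node.title is not None:
            return node.title, lookups
        node = node.parent
    raise ValueError("the song at the top must store a title")
```

With redundant storage every entity would answer in a single read, at the price of keeping copies consistent when a title is edited.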

Track renaming

Editing a track title for style reasons is no problem. But if a tracklisting is wrong and a track is renamed to a completely different song, this could lead to inconsistencies with the groups of that track. Therefore, it should be possible to change the groups of the track, and there should be an option "apply rename to version/recording/song group too" when editing track titles. Edits ignoring this would normally get voted down, but that is the ideal case and won't happen for artists without subscribers.

One track multiple songs

We already identified several cases where more than one song/recording is put together in one track: mash-ups, medleys, megamixes and just multiple songs in one track (for example hidden bonus tracks). How is this modeled with groups? I see two possibilities:

Either the combined track can be a member of multiple song groups (with the corresponding recording and version groups in between). Then we have direct links to the original material used in the track; AdvancedRelationships like SamplesRelationshipType and MashUpRelationshipType would be nearly obsolete, and their only use would be to describe the type of combination.

The other possibility: the combined track is a member of its own song group only. The contained songs are linked to this song with the appropriate AdvancedRelationship. If they weren't released separately (like the hidden tracks mentioned above), an empty abstract song group is simply created and linked to the song group of the combined track with some AdvancedRelationship.

Merging dependency

Example: You want to merge the song and recording groups for two tracks. The song group merge fails while the recording group merge goes through. What happens? The recording group merge should then fail too, since it depends on the song group merge. A better solution, though, would be for merging groups of one layer to automatically merge the connected groups of the higher layers. But this again leads to problems with multi-group membership and with the special purpose song groups described below.
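The "merge cascades upward" rule can be sketched with a redirect table, essentially a union-find without path compression. A minimal sketch under a loud assumption: every group has exactly one parent, so it deliberately ignores the multi-group membership and special purpose song group problems raised above. `GroupMerger` and the group ids are hypothetical.

```python
from typing import Dict, Optional


class GroupMerger:
    def __init__(self, parent: Dict[str, Optional[str]]) -> None:
        # parent: group -> group one layer up (None at the song layer).
        # Assumes a single parent per group (no multi-group membership).
        self.parent = dict(parent)
        self.merged_into: Dict[str, str] = {}

    def find(self, group: str) -> str:
        # Follow merge redirects to the surviving group.
        while group in self.merged_into:
            group = self.merged_into[group]
        return group

    def merge(self, keep: str, drop: str) -> None:
        keep, drop = self.find(keep), self.find(drop)
        if keep == drop:
            return
        self.merged_into[drop] = keep
        up_keep, up_drop = self.parent.get(keep), self.parent.get(drop)
        if up_keep is not None and up_drop is not None:
            # Identical recordings must belong to the same song, so the
            # merge cascades to the layer above.
            self.merge(up_keep, up_drop)
```

Merging two recording groups also merges their songs, while merging two song groups leaves the recordings below them separate, which matches the direction of the dependency described above.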

Special purpose song groups

Analogous to the special purpose artists, we would define several song groups that are used by many different tracks. These can act as collectors for tracks of types that were not composed and/or don't have lyrics, for example spontaneous performances at live shows, or audiobooks.

Such songs could be: [silence], [instrumental], [solo], [jam], [spokenword], [reading], [narration], ...

[1] Note that in Shepard's original text the entity which is now called "master" was called "track".