User:Jacobbrett/Recordings: Difference between revisions

Revision as of 09:29, 7 January 2013

This article is in the process of being written and refined. It is heavily inspired by the thoughts of many others on this matter.

The Big Questions

What do consumers want?

I think the average person would only care to differentiate mixes of a recording, if at all; that is, mono vs. stereo, remix vs. album edit vs. radio edit. This large group may include systematic users of MusicBrainz, such as BBC Music.

What do nerds want?

Some nerds want to be able to identify audio down to the bit level: accessing dynamic range information for a piece of audio (e.g., a track on a CD), viewing a waveform representation of that audio, or looking the audio up by a hash of its digital data.

Other nerds want to be able to discern different masters of a particular mix--perhaps a 1970s master vs. a clipped 2000s master, or a 2000s master with far less dynamic range. Not at the exacting bit level mentioned above, but close enough.

Why should we care about the nerds?

I think such granularity is required if MusicBrainz wants to have the highest-quality database of unambiguous information; in fact, it should be the definitive destination for music metadata. The common user should be able to ignore any extraneous "nerd" data if desired, and this should be reflected in the schema, the web services and, most importantly, the user interface.

We need only see point two of MusicBrainz's aims to recognise these long-term requirements:

MusicBrainz aims to be: The universal lingua franca for music by providing a reliable and unambiguous form of music identification, enabling both people and machines to have meaningful conversations about music.

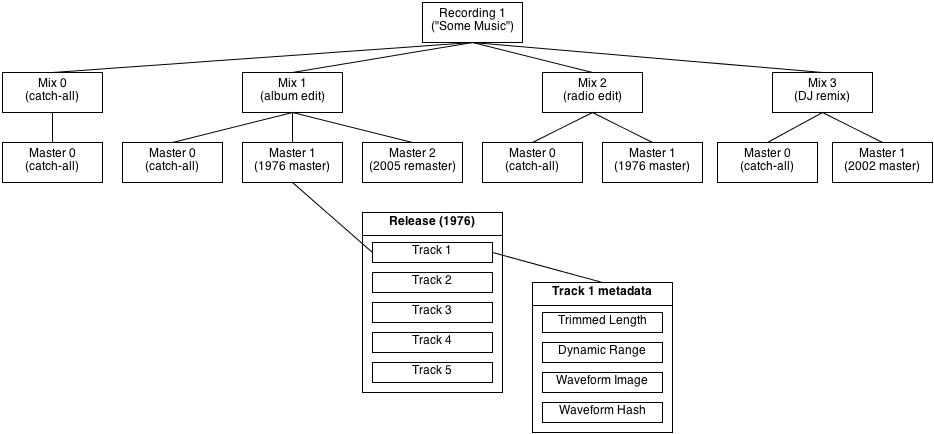

A Potential Solution

Here is one solution I've devised that I hope would cover practical use-cases mentioned above and more. I think the solution implemented must be the best starting point for additional features into the future, so that we're not stuck redefining and reimplementing existing features to suit. It's especially important to get these features correct sooner rather than later to limit corruption of data quality as the database grows exponentially.

Obviously, much work would need to be done on use-case and interface testing.

The Schema

Recordings

A conceptual entity which may represent a rough assemblage of studio or live takes, intended to be presented as a piece of recorded music. A bit "meta", unless it's representing a dry, one-track live recording.

Viewing a recording (via web service or the MusicBrainz website) should also return the nominal mix (the mix most commonly occurring on tracklists, or a flagged mix--see below).

Mixes

A particular arrangement of the elements of a recording, e.g. stereo mix, surround mix, overdub re-mix, karaoke mix. This is generally the level of granularity the consumer cares about.

We should perhaps have the ability to flag a single mix as the "nominal" mix, so that it may be returned with any request for a recording. It may be beneficial to particularly flag the album mix of a recording, so that it is better represented than a hypothetical hugely popular DJ remix.
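The nominal-mix rule described above (a flagged mix wins, otherwise the mix that occurs on the most tracklists) could be sketched roughly as follows. This is only an illustration: the class names, the `tracklist_count` field and the `flagged_nominal` flag are assumptions made for the example, not part of any existing MusicBrainz schema.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Mix:
    name: str
    tracklist_count: int = 0       # hypothetical: how many tracklists use this mix
    flagged_nominal: bool = False  # hypothetical: editor-set "nominal" flag


@dataclass
class Recording:
    title: str
    mixes: list[Mix] = field(default_factory=list)

    def nominal_mix(self) -> Optional[Mix]:
        """Return the flagged mix if there is one, else the most common mix."""
        if not self.mixes:
            return None
        for mix in self.mixes:
            if mix.flagged_nominal:
                return mix
        # No flag set: fall back to the mix used on the most tracklists.
        return max(self.mixes, key=lambda m: m.tracklist_count)
```

With an album mix on 3 tracklists and a DJ remix on 50, the unflagged result would be the DJ remix; flagging the album mix makes it the one returned with the recording.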

How can one identify a mix?

Masters

A mix that has had its audio levels modified to suit a delivery medium and/or artistic purpose. This closely represents the audio the consumer receives. Most people probably don't care about masters and may become overwhelmed by too many levels of granularity, so the existence of masters must be veiled unless explicitly requested, and they should instead be represented by their respective mixes.

Similar to above, it may be beneficial to flag a nominal master--this might be used to indicate a master that has suffered the least clipping and dynamic range compression.

When to differentiate masters?

- Gain?

- Extra silence at beginning/end?

- 0-1 seconds extra audio at beginning/end?

Display of master entities could be disabled, both for editors and for web services. Tracks would then be shown as related directly to mixes (track entities themselves would not be displayed either).

Tracks

Represents (in the case of digital audio) the bits written to a medium that represent a waveform (e.g. uncompressed CD audio, decoded MP3 waveform). This is for the nerds. Again, the existence of such entities must be hidden, unless requested, instead being directly represented by their parent mixes.

How do we handle digital releases that represent multiple lossy encodings--new release and track IDs for each encoding? Do we ignore lossy vendors and focus on lossless releases only (in terms of creating new track IDs and recording metadata)?

The User Interface

Catch-alls

These are the default entities recursively created when a new recording or mix is created. They are used by default when linking tracks, unless a more specific mix or master is created and used.
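One way the catch-all idea might look in code is sketched below: creating a recording creates a default mix, creating a mix creates a default master, and a track is linked to those defaults unless something more specific is given. All names here (`Recording`, `Mix`, `Master`, `link_track`, the `catch_all` flag and the bracketed default names) are hypothetical and exist only for this example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Master:
    name: str
    catch_all: bool = False
    tracks: list[str] = field(default_factory=list)  # linked track IDs


@dataclass
class Mix:
    name: str
    catch_all: bool = False
    masters: list[Master] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Every mix gets a catch-all master if it does not have one yet.
        if not any(m.catch_all for m in self.masters):
            self.masters.insert(0, Master("[default master]", catch_all=True))


@dataclass
class Recording:
    title: str
    mixes: list[Mix] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Every recording gets a catch-all mix (which creates its own master).
        if not any(m.catch_all for m in self.mixes):
            self.mixes.insert(0, Mix("[default mix]", catch_all=True))


def link_track(recording: Recording, track_id: str,
               mix: Optional[Mix] = None,
               master: Optional[Master] = None) -> Master:
    """Link a track to the given master, else to the relevant catch-all."""
    if master is None:
        if mix is None:
            mix = next(m for m in recording.mixes if m.catch_all)
        master = next(m for m in mix.masters if m.catch_all)
    master.tracks.append(track_id)
    return master
```

An editor who knows nothing about mixes or masters would simply call `link_track(recording, track_id)`, while a more specific editor can pass a particular mix or master.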

Extras

Acoustids

Attach Acoustids to mixes by default. If a master/track-level editor can verify an Acoustid as representing a particular master, remove the Acoustid from the mix (if it exists) and attach it to the master. The mix would inherit the Acoustid, so the general user would see no difference. This may be repeated for the same Acoustid if it belongs to several masters. Semantically, the Acoustid should always be represented at the mix level to allow for ambiguity.
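The Acoustid behaviour described above can be illustrated with a small sketch: Acoustids start on the mix, may be moved to one or more verified masters, and remain visible at the mix level through inheritance. The class and method names (`attach`, `verify_on_master`, `own_acoustids`) are assumptions made for the example and do not refer to any existing API.

```python
from dataclasses import dataclass, field


@dataclass
class Master:
    name: str
    acoustids: set[str] = field(default_factory=set)


@dataclass
class Mix:
    name: str
    masters: list[Master] = field(default_factory=list)
    own_acoustids: set[str] = field(default_factory=set)  # attached directly

    def attach(self, acoustid: str) -> None:
        """Default case: attach the Acoustid to the mix itself."""
        self.own_acoustids.add(acoustid)

    def verify_on_master(self, acoustid: str, master: Master) -> None:
        """Move a verified Acoustid from the mix down to one of its masters."""
        if master not in self.masters:
            raise ValueError("master does not belong to this mix")
        self.own_acoustids.discard(acoustid)  # no-op if it was never on the mix
        master.acoustids.add(acoustid)

    @property
    def acoustids(self) -> set[str]:
        """Everything a general user sees: direct plus inherited Acoustids."""
        inherited = set().union(*(m.acoustids for m in self.masters))
        return self.own_acoustids | inherited
```

Calling `verify_on_master` again with the same Acoustid and a different master covers the case where one Acoustid belongs to several masters; the set seen at the mix level does not change.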

Track entity features

The following might be processed by Picard:

- Waveform hash

- Dynamic Range calculation

- Low-resolution visual waveform (GIF/PNG data)

- Length calculation in ms (perhaps more universal?) or frames (after stripping silence from start and end)
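As a rough illustration of the features listed above, the sketch below works on mono 16-bit PCM samples held in a plain Python list. Several choices are assumptions rather than anything decided here: SHA-256 as the hash, a simple amplitude threshold for "silence", "frames" read as CD frames of 1/75 second, and the crest factor (peak-to-RMS ratio) standing in for a dynamic range measure. It is not how Picard works today.

```python
import hashlib
import math
import struct


def strip_silence(samples: list[int], threshold: int = 0) -> list[int]:
    """Drop leading and trailing samples whose amplitude is <= threshold."""
    start, end = 0, len(samples)
    while start < end and abs(samples[start]) <= threshold:
        start += 1
    while end > start and abs(samples[end - 1]) <= threshold:
        end -= 1
    return samples[start:end]


def waveform_hash(samples: list[int]) -> str:
    """SHA-256 of the samples packed as little-endian signed 16-bit values."""
    raw = struct.pack(f"<{len(samples)}h", *samples)
    return hashlib.sha256(raw).hexdigest()


def length_ms(samples: list[int], sample_rate: int = 44100) -> int:
    """Length in milliseconds, rounded to the nearest integer."""
    return round(len(samples) * 1000 / sample_rate)


def length_cd_frames(samples: list[int], sample_rate: int = 44100) -> int:
    """Length in CD frames (assumed 75 per second), rounded up."""
    return math.ceil(len(samples) * 75 / sample_rate)


def crest_factor_db(samples: list[int]) -> float:
    """Peak-to-RMS ratio in dB; a crude stand-in for a dynamic range figure."""
    if not samples:
        return 0.0
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0:
        return 0.0
    return 20 * math.log10(peak / rms)
```

Hashing and measuring length after `strip_silence` means two tracks that differ only in padding silence would produce the same values, which bears on the question of when to differentiate masters.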